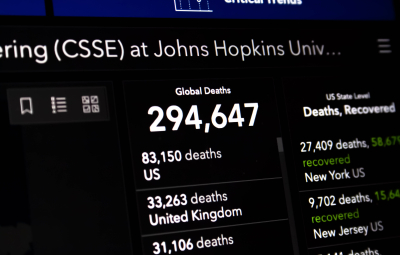

More exposure means more opportunities to mutate. More selection pressure means more variants. This is certainly true for COVID-19 and it might also be true for political rhetoric. The many additional news conferences, press briefings and talk radio slots brought on by the pandemic are perfect conditions for breeding new sound bites and rhetorical tricks. Angry journalists and members of the public provide the selection pressure, weeding out the phrases that fall flat.

Some of my favourites doing the rounds with UK politicians are: ‘We’ve always maintained that …’ (ideal for appearing to have foreseen all developments, especially when no one is checking), ‘following the science’ (when would you not?) and ‘in the round’ (a business-speak meme that somehow infected the UK cabinet). But the one that bothers me the most is ‘There is no evidence that …’ (insert your rival’s claim about face masks, the COVID-hit economy, COVID transmission in schools and so on).

Why? Because it insinuates that the evidence has been looked for and that the methods used were up to the job. We accept this in good faith. However, a wider, more cynical interpretation of ‘There is no evidence that …’ covers situations where the lack of evidence is down to practical limitations such as crude tools, small data sets or insufficient time to complete a study. I’m sure there are many cases where politicians and their representatives are quoting bona fide studies where ‘no evidence’ really does mean ‘no evidence – and we did our best to find it!’ It’s just that there are other occasions where I worry that we are being mis-sold the facts – occasions where ‘no evidence’ means ‘no evidence – because we haven’t yet looked hard enough’.

While the most dastardly might claim a ‘no evidence’ result for cases where absolutely no work has been done to find it, it is much more likely that a study has been completed but lacks the power to detect the evidence, where power is the probability that a test picks up an effect that is really there. Low power can be due to too few data points, poor choice of model or test, or both. It sometimes feels like scientists are less switched on to the danger of low-powered statistical tests (delivering us too many false negatives) than they are to problems such as multiple testing (delivering us too many false positives). Perhaps this is because most of the recent statistical angst has been directed towards the false positives in research papers. In science, a positive result is, rightly or wrongly, more highly prized than a negative one. But there are other areas (politics for example) where there are incentives for delivering a negative result.
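To put a rough number on low power, here is a minimal sketch in Python. It is an illustration under stated assumptions rather than a recipe: the two-sided exact binomial test, the 5% significance level and the ‘true’ bias of 0.7 are all my choices, and the function names are made up for the example.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k heads in n flips when P(heads) = p."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def critical_value(n, alpha=0.05):
    """Largest c such that rejecting 'fair' when heads <= c or heads >= n - c
    keeps the false-positive rate at or below alpha.
    Returns -1 if no such region exists (the test can never reject)."""
    c = -1
    tail = 0.0
    for k in range(n // 2):
        tail += binom_pmf(k, n, 0.5)
        if 2 * tail > alpha:  # both tails together would exceed alpha
            break
        c = k
    return c

def power(n, p_true, alpha=0.05):
    """Chance that the test rejects 'fair' when the coin really has bias p_true."""
    c = critical_value(n, alpha)
    if c < 0:
        return 0.0
    return sum(binom_pmf(k, n, p_true)
               for k in range(n + 1)
               if k <= c or k >= n - c)

# Assumed scenario: the coin really lands heads 70% of the time.
for flips in (10, 50, 200):
    print(flips, round(power(flips, 0.7), 3))
```

Under these assumptions, ten flips give a power of about 0.15: roughly 85% of the time the study would report ‘no evidence’ of bias in a coin that is in fact heavily biased. The same test with a couple of hundred flips almost never misses it.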

One way to flush out the ‘no evidence’ trick is to ask about evidence for the opposing view. I might claim, for example, that there is no evidence that a particular coin is biased (withholding from you the fact that this was based on a mere ten flips). If you then ask me whether there is any evidence, based on the same data, that the coin is not biased then I will have to concede that there is not. More formally, we could look at the ratio of the two probabilities (for the coin being biased and the coin being unbiased) – given the data. If the ratio is close to one then there is nothing in it between the two hypotheses. This idea is embodied in likelihood ratios and Bayes factors, and has the great virtue of telling both sides of the story.
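The coin example can be sketched as a small Bayes factor calculation. The details here are assumptions of mine, not part of the original argument: the data (7 heads in 10 flips) are hypothetical, ‘biased’ is modelled as a uniform prior over every possible heads probability, and the function name is invented for the illustration. With a uniform prior, the probability of any particular head count works out to 1/(flips + 1).

```python
from math import comb

def bayes_factor_biased_vs_fair(heads, flips):
    """Ratio of how well 'biased' and 'fair' each predict the observed data.

    'fair' fixes P(heads) at 0.5. 'biased' (an assumption for this sketch)
    spreads belief uniformly over all values of P(heads), which makes every
    head count from 0 to flips equally likely in advance.
    """
    prob_data_if_fair = comb(flips, heads) * 0.5**flips
    prob_data_if_biased = 1.0 / (flips + 1)
    return prob_data_if_biased / prob_data_if_fair

# Hypothetical data: ten flips versus a hundred, same proportion of heads.
print(round(bayes_factor_biased_vs_fair(7, 10), 2))
print(round(bayes_factor_biased_vs_fair(70, 100), 1))
```

For 7 heads in 10 flips the ratio comes out a little below one (about 0.78), so the data barely distinguish the two hypotheses: no real evidence of bias, and no real evidence of fairness either. With 70 heads in 100 flips the ratio runs into the hundreds in favour of bias. If both hypotheses are considered equally plausible beforehand, this ratio is also the ratio of their probabilities given the data.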

Statistics, then, has something very practical to say to frustrated journalists stuck in press briefings. Faced with a response that begins ‘There’s no evidence that …’, choose one or both of the following follow-up questions:

‘What studies have been done to look for that evidence?’ and/or

‘Is there any evidence that the opposite is true?’